How to list TCP/IP connections per remote IP, ordered by count:

$ netstat -ntu | awk '{print $5}' | cut -d: -f1 -s | sort | uniq -c | sort -nk1 -r
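To see what each stage of the pipeline contributes, here is a minimal sketch that wraps it in a function and feeds it a few made-up netstat lines. The function name count_remote_ips and the sample addresses are my own placeholders, not real output.

```shell
# count_remote_ips: read `netstat -ntu` style lines on stdin and print
# "count ip" pairs, most frequent remote IP first.
count_remote_ips() {
    # $5 is the foreign address; cut keeps the part before the first colon
    # and, thanks to -s, drops header lines that contain no colon at all.
    awk '{print $5}' | cut -d: -f1 -s | sort | uniq -c | sort -nk1 -r
}

# Made-up sample input standing in for real netstat output.
count_remote_ips <<'EOF'
Proto Recv-Q Send-Q Local Address           Foreign Address         State
tcp        0      0 10.0.0.5:22             192.0.2.10:51000        ESTABLISHED
tcp        0      0 10.0.0.5:443            198.51.100.7:40000      TIME_WAIT
tcp        0      0 10.0.0.5:443            192.0.2.10:51001        ESTABLISHED
EOF
```

On a live system the function would be used as `netstat -ntu | count_remote_ips`. Cutting at the first colon assumes IPv4 addresses, so IPv6 peers would need different handling.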

Display certificate information:

$ openssl s_client -connect muras.eu:443

CONNECTED(00000003)

depth=2 C = US, O = Internet Security Research Group, CN = ISRG Root X1

verify return:1

depth=1 C = US, O = Let's Encrypt, CN = R3

verify return:1

depth=0 CN = ala.muras.eu

verify return:1

---

Certificate chain

0 s:CN = ala.muras.eu

i:C = US, O = Let's Encrypt, CN = R3

1 s:C = US, O = Let's Encrypt, CN = R3

i:C = US, O = Internet Security Research Group, CN = ISRG Root X1

2 s:C = US, O = Internet Security Research Group, CN = ISRG Root X1

i:O = Digital Signature Trust Co., CN = DST Root CA X3

---

Server certificate

-----BEGIN CERTIFICATE-----

MIIFZjCCBE6gAwIBAgISAytgxCG8Nfa5gbAkMQHXSwOMMA0GCSqGSIb3DQEBCwUA

MDIxCzAJBgNVBAYTAlVTMRYwFAYDVQQKEw1MZXQncyBFbmNyeXB0MQswCQYDVQQD

EwJSMzAeFw0yMjA0MDQxODA5MzFaFw0yMjA3MDMxODA5MzBaMBcxFTATBgNVBAMT

DGFsYS5tdXJhcy5ldTCCASIwDQYJKoZIhvcNAQEBBQADggEPADCCAQoCggEBALg4

9WBf1tHJNysqDl6bTKj+8no8+QSV/xqxfpcgr9uIEUTYbJtHHNFHDi1QjaufaDBG

ryZsAUO5VfxHygPH93WQc4qX3ZQoaZ7+xA4QjGwR4zJw3CqdQNXXXfoW456iIHrz

EgzSf6KctnQg8VBGhnTqE0ZZN3QTHtLoRy2J/RcTl0z48SLBS60EpeOmIzjek5X1

mii+ZznEa3R+zat9bXxVxiwhFvxS+bhClEUrFYI5I5zPOs7ByUstc2c6Tws1wW2y

R4CEsuLcwvHSH6W7dN3CPjYZ5TbuYuprGxEgYSDJRN07bipy95R4BrHiKAk6R66a

UHlho4KwK7tnjs9VSdkCAwEAAaOCAo8wggKLMA4GA1UdDwEB/wQEAwIFoDAdBgNV

HSUEFjAUBggrBgEFBQcDAQYIKwYBBQUHAwIwDAYDVR0TAQH/BAIwADAdBgNVHQ4E

FgQUObZv+7j4EQHj5orKa2O1i0Yhd7UwHwYDVR0jBBgwFoAUFC6zF7dYVsuuUAlA

5h+vnYsUwsYwVQYIKwYBBQUHAQEESTBHMCEGCCsGAQUFBzABhhVodHRwOi8vcjMu

by5sZW5jci5vcmcwIgYIKwYBBQUHMAKGFmh0dHA6Ly9yMy5pLmxlbmNyLm9yZy8w

YAYDVR0RBFkwV4IMYWxhLm11cmFzLmV1ggxkb2MubXVyYXMuZXWCEG1pa29sYWou

bXVyYXMuZXWCD21vbmljYS5tdXJhcy5ldYIIbXVyYXMuZXWCDHd3dy5tdXJhcy5l

dTBMBgNVHSAERTBDMAgGBmeBDAECATA3BgsrBgEEAYLfEwEBATAoMCYGCCsGAQUF

BwIBFhpodHRwOi8vY3BzLmxldHNlbmNyeXB0Lm9yZzCCAQMGCisGAQQB1nkCBAIE

gfQEgfEA7wB1AEHIyrHfIkZKEMahOglCh15OMYsbA+vrS8do8JBilgb2AAABf/X7

deIAAAQDAEYwRAIgL+/+47ymSnPD786/vSsLAe9DnvdPSDhzB95iDJWRjBECIAYI

AwwP6sQhB852PAq2ImsgJC0UGrmr3BodVWjnRcMFAHYARqVV63X6kSAwtaKJafTz

fREsQXS+/Um4havy/HD+bUcAAAF/9ft2BgAABAMARzBFAiAYmpaYKA4Rklxe7KF2

3faQo5WQzwIQGMG/EBHsj55bWgIhAN/AyVz5PZ5x74R1otpwH+ULFcbyodU2TjrV

tmJMi1QSMA0GCSqGSIb3DQEBCwUAA4IBAQBTRMekA7B8D3EHvHPVFsjCePvWUX1D

sDTX/HJIAZ+L7szjQLZKHvDZRuoCceikZmGV4aFIdyt+jlEQneJVFj5QCEtjjjiI

j1eTEGSnotHXRAQeW1sjtGgSLWXrRJsLJNqzLfXw25/XJgSK/KIwuvh+KI32kaYl

+95nd1FHwZshNgttC8ihTFBQWijJVV6sOeyGE3JZHWBDQfjp7kbUvGxfLIi1ziWM

6ry0+FcICtVMWwLbQi4HMxax2PvTdCCQZCrOaWiM1xQ/p4k1p3iY7fyTdl9Sr6yr

Y+m6RPgVr/JEIKWGQtWCwtqk0TzrOUwIBIw5xU1HyA5hz7vOrzxeROSM

-----END CERTIFICATE-----

subject=CN = ala.muras.eu

issuer=C = US, O = Let's Encrypt, CN = R3

---

No client certificate CA names sent

Peer signing digest: SHA256

Peer signature type: RSA-PSS

Server Temp Key: X25519, 253 bits

---

SSL handshake has read 4642 bytes and written 380 bytes

Verification: OK

---

New, TLSv1.3, Cipher is TLS_AES_256_GCM_SHA384

Server public key is 2048 bit

Secure Renegotiation IS NOT supported

Compression: NONE

Expansion: NONE

No ALPN negotiated

Early data was not sent

Verify return code: 0 (ok)

---

---

Post-Handshake New Session Ticket arrived:

SSL-Session:

Protocol : TLSv1.3

Cipher : TLS_AES_256_GCM_SHA384

Session-ID: 1A3E72C7B0EE867B11C105EFAE3C39CE4FB149B0EBCFD836792AA44161637204

Session-ID-ctx:

Resumption PSK: 5A4A7C751C76D7AED82A657151666E9AA15749C001A641871C2F70295EFCEDC9CFBE6FDDCD9C2EC151086FE17DE8222F

PSK identity: None

PSK identity hint: None

SRP username: None

TLS session ticket lifetime hint: 300 (seconds)

TLS session ticket:

0000 - 92 7a dd 24 74 a4 76 ba-76 7a 9f 79 3b 9c 35 bc .z.$t.v.vz.y;.5.

0010 - ba 5f e8 bb 82 4a 84 47-7f 9d d0 0a f9 fa ab f3 ._...J.G........

Start Time: 1652895551

Timeout : 7200 (sec)

Verify return code: 0 (ok)

Extended master secret: no

Max Early Data: 0

---

read R BLOCK

---

Post-Handshake New Session Ticket arrived:

SSL-Session:

Protocol : TLSv1.3

Cipher : TLS_AES_256_GCM_SHA384

Session-ID: B90C92D0D4BF52C38E33C8C28210D43137E0642528C4F7FEEF9F77E06D5484CF

Session-ID-ctx:

Resumption PSK: 27AFDE9BBFFCCC660EC5C73053BCB7837A002ACBD7E7233397A834439B5514CDBDE8B4FDC2002970A61A2764262ABBAA

PSK identity: None

PSK identity hint: None

SRP username: None

TLS session ticket lifetime hint: 300 (seconds)

TLS session ticket:

0000 - 59 d0 96 33 41 f2 23 a7-45 87 d3 57 e5 eb 5f ba Y..3A.#.E..W.._.

0010 - 58 18 2f 31 f6 28 ef 21-5e e6 e7 34 2f f0 43 72 X./1.(.!^..4/.Cr

Start Time: 1652895551

Timeout : 7200 (sec)

Verify return code: 0 (ok)

Extended master secret: no

Max Early Data: 0

---

read R BLOCK

Send a GET request to https://muras.eu and use muras.eu for SNI:

$ echo -e "GET / HTTP/1.1\r\nHost: muras.eu\r\nConnection: close\r\n\r\n" | openssl s_client -quiet -connect muras.eu:443 -servername muras.eu

depth=2 C = US, O = Internet Security Research Group, CN = ISRG Root X1

verify return:1

depth=1 C = US, O = Let's Encrypt, CN = R3

verify return:1

depth=0 CN = ala.muras.eu

verify return:1

HTTP/1.1 200 OK

Date: Wed, 18 May 2022 17:29:56 GMT

Server: Apache/2.4.48 (Ubuntu)

Last-Modified: Tue, 08 Feb 2022 17:44:05 GMT

ETag: "11c3c-5d785436c2f8e"

Accept-Ranges: bytes

Content-Length: 72764

Vary: Accept-Encoding

Connection: close

Content-Type: text/html

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="utf-8">

<meta http-equiv="X-UA-Compatible" content="IE=edge">

<meta name="viewport" content="width=device-width, initial-scale=1">

<title>Tomasz Muras</title>

<meta name="description" content="Technical blog.">

...

Verify certificate chain:

# Store all certificates

$ openssl s_client -connect muras.eu:443 -showcerts </dev/null > cert.pem

# Extract them into cert1.pem cert2.pem cert3.pem

# Verify

$ openssl verify -CAfile cert2.pem cert1.pem

cert1.pem: OK

List of the error codes:

$ man verify
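The "extract them into cert1.pem cert2.pem cert3.pem" step of the chain verification can be done by hand in an editor, but a short awk script does the same job. This is only a sketch: the function name split_certs is made up, and it assumes the input contains standard PEM BEGIN/END CERTIFICATE markers, as the -showcerts output does.

```shell
# split_certs FILE: write each PEM certificate found in FILE to
# cert1.pem, cert2.pem, ... in the current directory.
# Lines outside the BEGIN/END markers (handshake details etc.) are skipped.
split_certs() {
    awk '
        /-----BEGIN CERTIFICATE-----/ { n++; out = "cert" n ".pem" }
        out != ""                     { print > out }
        /-----END CERTIFICATE-----/   { close(out); out = "" }
    ' "$1"
}

# Typical use, following the steps above:
#   split_certs cert.pem
#   openssl verify -CAfile cert2.pem cert1.pem
```

The certificates are numbered in the order the server sent them, so cert1.pem is the server certificate and cert2.pem its issuer.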

Cheatsheet for ranger - a console file manager with VI key bindings, based on heroheman.

I definitely recommend the book to anyone interested in security.

Below are some quotes I’ve enjoyed.

[…] people do logic much better if the problem is set in a social role. In the Wason test, subjects are told they have to inspect some cards with a letter grade on one side, and a numerical code on the other, and given a rule such as “If a student has a grade D on the front of their card, then the back must be marked with code 3”. They are shown four cards displaying (say) D, F, 3 and 7 and then asked “Which cards do you have to turn over to check that all cards are marked correctly?” Most subjects get this wrong; in the original experiment, only 48% of 96 subjects got the right answer of D and 7. However the evolutionary psychologists Leda Cosmides and John Tooby found the same problem becomes easier if the rule is changed to “If a person is drinking beer, he must be 20 years old” and the individuals are a beer drinker, a coke drinker, a 25-year-old and a 16-year-old. Now three-quarters of subjects deduce that the bouncer should check the age of the beer drinker and the drink of the 16-year-old. Cosmides and Tooby argue that our ability to do logic and perhaps arithmetic evolved as a means of policing social exchanges.

Six main classes of techniques used to influence people and close a sale:

One chain of cheap hotels in France introduced self service. You’d turn up at that hotel, swipe your credit card in the reception machine, and get a receipt with a numerical access code to unlock your room door. To keep costs down, the rooms did not have en-suite bathrooms. A common failure mode was that you’d get up in the middle of the night to go to the bathroom, forget your access code, and realise you hadn’t taken the receipt with you. So you’d have to sleep on the bathroom floor until the staff arrived the following morning.

[…] many security failures weren’t due to technical errors so much as to wrong incentives: if the people who guard a system are not the people who suffer when it fails, then you can expect trouble.

[…] suppose that there are 100 used cars for sale in a town: 50 well maintained cars worth $2000 each, and 50 “lemons” worth $1000. The sellers know which is which, but the buyers don’t. What is the market price of a used car? You might think $1500; but at that price, no good cars will be offered for sale. So the market price will be close to $1000. This is why, if you buy a new car, maybe 20% falls off the price the second you drive it out of the dealer’s lot. […] When users can’t tell good from bad, they might as well buy the cheapest.

Typical corporate policy language:

[…] it’s inevitable that your top engineers will be so much more knowledgeable than your auditors that they could do bad things if they really wanted to.

The big audit firms have a pernicious effect on the information security world by pushing their own list of favourite controls, regardless of the client’s real risks. They maximise their income by nit-picking and compliance; the Sarbanes-Oxley regulations cost the average US public company over $1m a year in audit fees.

The banks’ response was intrusion detection systems that tried to identify criminal businesses by correlating the purchase histories of customers who complained. By the late 1990s, the smarter crooked businesses learned to absorb the cost of the customer’s transaction. You have a drink at a Mafia-owned bistro, offer a card, sign the voucher, and fail to notice when the charge doesn’t appear on your bill. A month or two later, there’s a huge bill for jewelry, electrical goods or even casino chips. By then you’ve forgotten about the bistro, and the bank never had a record of it.

[…] NIST Dual-EC-DRBG, which was built into Windows and seemed to have contained an NSA trapdoor; Ed Snowden later confirmed that the NSA paid RSA $10m to use this standard in tools that many tech companies licensed.

One national-security concern is that as defence systems increasingly depend on chips fabricated overseas, the fabs might introduce extra circuitry to facilitate later attack. For example, some extra logic might cause a 64-bit multiply with two specific inputs to function as a kill switch.

Another example is that a laser pulse can create a click on a microphone, so a voice command can be given to a home assistant through a window.

Countries that import their telephone exchanges rather than building their own just have to assume that their telephone switchgear has vulnerabilities known to the supplier’s government. (During the invasion of Afghanistan in 2001, Kabul had two exchanges: an old electromechanical one and a new electronic one. The USAF bombed only the first.)

Traffic analysis - looking at the number of messages by source and destination - can also give very valuable information. Imminent attacks were signalled in World War 1 by greatly increased volume of radio messages, and more recently by increased pizza deliveries to the Pentagon.

[…] meteor burst transmission (also known as meteor scatter). This relies on the billions of micrometeorites that strike the Earth’s atmosphere each day, each leaving a long ionization trail that persists for typically a third of a second and provides a temporary transmission path between a mother station and an area of maybe a hundred miles long and a few miles wide. The mother station transmits continuously; whenever one of the daughters is within such an area, it hears mother and starts to send packets of data at high speed, to which mother replies. With the low power levels used in covert operations one can achieve an average data rate of about 50 bps, with an average latency of about 5 minutes and a range of 500 - 1500 miles. Meteor burst communications are used by special forces, and civilian applications such as monitoring rainfall in remote parts of the third world.

[…] the United States was deploying “neutron bombs” in Europe - enhanced radiation weapons that could kill people without demolishing buildings. The Soviets portrayed this as a “capitalist bomb” that would destroy people while leaving property intact, and responded by threatening a “socialist bomb” to destroy property (in the form of electronics) while leaving the surrounding people intact.

A certain level of sharing was good for business. People who got a pirate copy of a tool and liked it would often buy a regular copy, or persuade their employer to buy one. In 1998 Bill Gates even said, “Although about three million computers get sold every year in China, people don’t pay for the software. Someday they will, though. And as long as they’re going to steal it, we want them to steal ours. They’ll get sort of addicted, and then we’ll somehow figure out how to collect sometime in the next decade”

[…] one cable TV broadcast a special offer for a free T-shirt, and stopped legitimate viewers from seeing the 0800 number to call; this got them a list of the pirates’ customers.

Early in the lockdown, some hospitals didn’t have enough batteries for the respirators used by their intensive-care clinicians, now they were being used 24x7 rather than occasionally. The market-leading 3M respirators and the batteries that powered them had authentication chips, so the company could sell batteries for over $200 that cost $5 to make. Hospitals would happily have bought more for $200, but China had nationalised the factory the previous month, and 3M wouldn’t release the keys to other component suppliers.

How could the banking industry’s thirst for a respectable cipher be slaked, not just in the USA but overseas, without this cipher being adopted by foreign governments and driving up the costs of intelligence collection? The solution was the Data Encryption Standard (DES). At the time, there was controversy about whether 56 bits were enough. We know now that this was deliberate. The NSA did not at the time have the machinery to do DES keysearch; that came later. But by giving the impression that they did, they managed to stop most foreign governments adopting it. The rotor machines continued in service, in many cases reimplemented using microcontrollers […] the traffic continued to be harvested. Foreigners who encrypted their important data with such ciphers merely marked that traffic as worth collecting.

Most of the Americans who died as a result of 9/11 probably did so since then in car crashes, after deciding to drive rather than fly: the shift from flying to driving led to about 1,000 extra fatalities in the following three months alone, and about 500 a year since then.

So a national leader trying to keep a country together following an attack should constantly remind people what they’re fighting for. This is what the best leaders do, from Churchill’s radio broadcasts to Roosevelt’s fireside chats.

IBM had separated the roles of system analyst, programmer and tester; the analyst spoke to the customer and produced a design, which the programmer coded, and then the tester looked for bugs in the code. The incentives weren’t quite right, as the programmer could throw lots of buggy code over the fence and hope that someone else would fix it. This was slow and led to bloated code. Microsoft abolished the distinction between analyst, programmers and testers; it had only developers, who spoke to the customer and were also responsible for fixing their own bugs. This held up the bad programmers who wrote lots of bugs, so that more of the code was produced by the more skillful and careful developers. According to Steve Maguire, this is what enabled Microsoft to win the battle to rule the world of 32-bit operating systems.

Bezos’ law says you can’t run a dev project with more people than can be fed from two pizzas.

Another factor in team building is the adoption of a standard style. One signal of poorly-managed teams is that the codebase is in a chaotic mixture of styles, with everybody doing their own thing. When a programmer checks out some code to work on it, they may spend half an hour formatting it and tweaking it into their style. Apart from the wasted time, reformatted code can trip up your analysis tools.

When you really want a protection property to hold, it’s vital that the design and implementation be subjected to hostile review. It will be eventually, and it’s likely to be cheaper if it’s done before the system is fielded. As we’ve seen in one case history after another, the motivation of the attacker is critical; friendly reviews, by people who want the system to pass, are essentially useless compared with contributions by people who are seriously trying to break it.

Working on my local Ubuntu 20.04 installation, I will set up a development environment and implement Symfony authentication with LDAP.

Citing from the documentation, I’m interested in this scenario:

Checking a user’s password against an LDAP server while fetching user information from another source (database using FOSUserBundle, for example).

Set up Symfony and create a new project as per symfony.com/download:

# Make sure you have PHP extensions we will use and ldapsearch.

sudo apt install php-ldap php-sqlite3 ldap-utils

# Create new Symfony project.

symfony new ldap_test --version=5.4 --full

cd ldap_test

composer require symfony/ldap

To make it simple, I will use sqlite to store the user records, just uncomment in .env:

DATABASE_URL="sqlite:///%kernel.project_dir%/var/data.db"

Create the user entity. Accept all the defaults, except for “Does this app need to hash/check user passwords?”, where I answered no.

php bin/console make:user

php bin/console make:migration

php bin/console doctrine:migrations:migrate

Create controller from a template:

php bin/console make:controller Protected

In config/packages/security.yaml, add an access_control rule to protect access to our newly created action:

access_control:

- { path: ^/protected, roles: ROLE_USER }

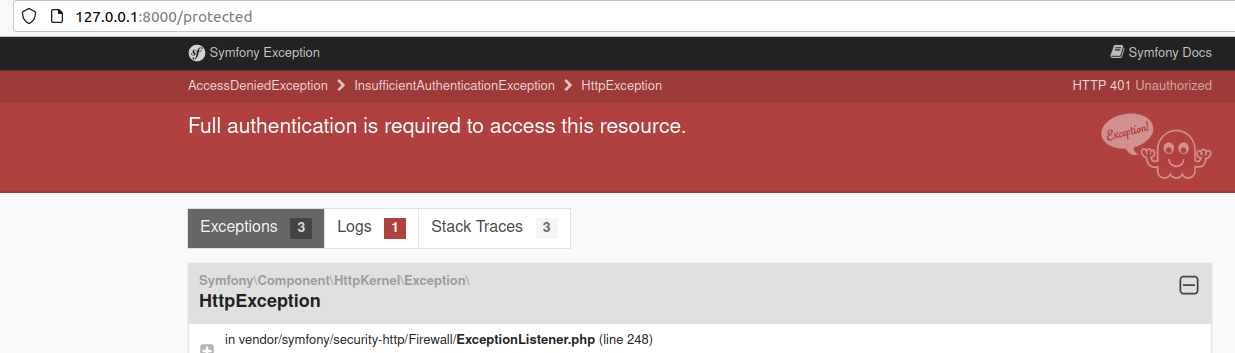

Run the server and make sure that the access to http://127.0.0.1:8000/protected is not allowed:

Let’s create a sample user record in the database:

sqlite> .schema user

CREATE TABLE user (id INTEGER PRIMARY KEY AUTOINCREMENT NOT NULL, email VARCHAR(180) NOT NULL, roles CLOB NOT NULL --(DC2Type:json)

);

CREATE UNIQUE INDEX UNIQ_8D93D649E7927C74 ON user (email);

sqlite> INSERT INTO user VALUES (NULL,'test@example.invalid','["ROLE_USER"]');

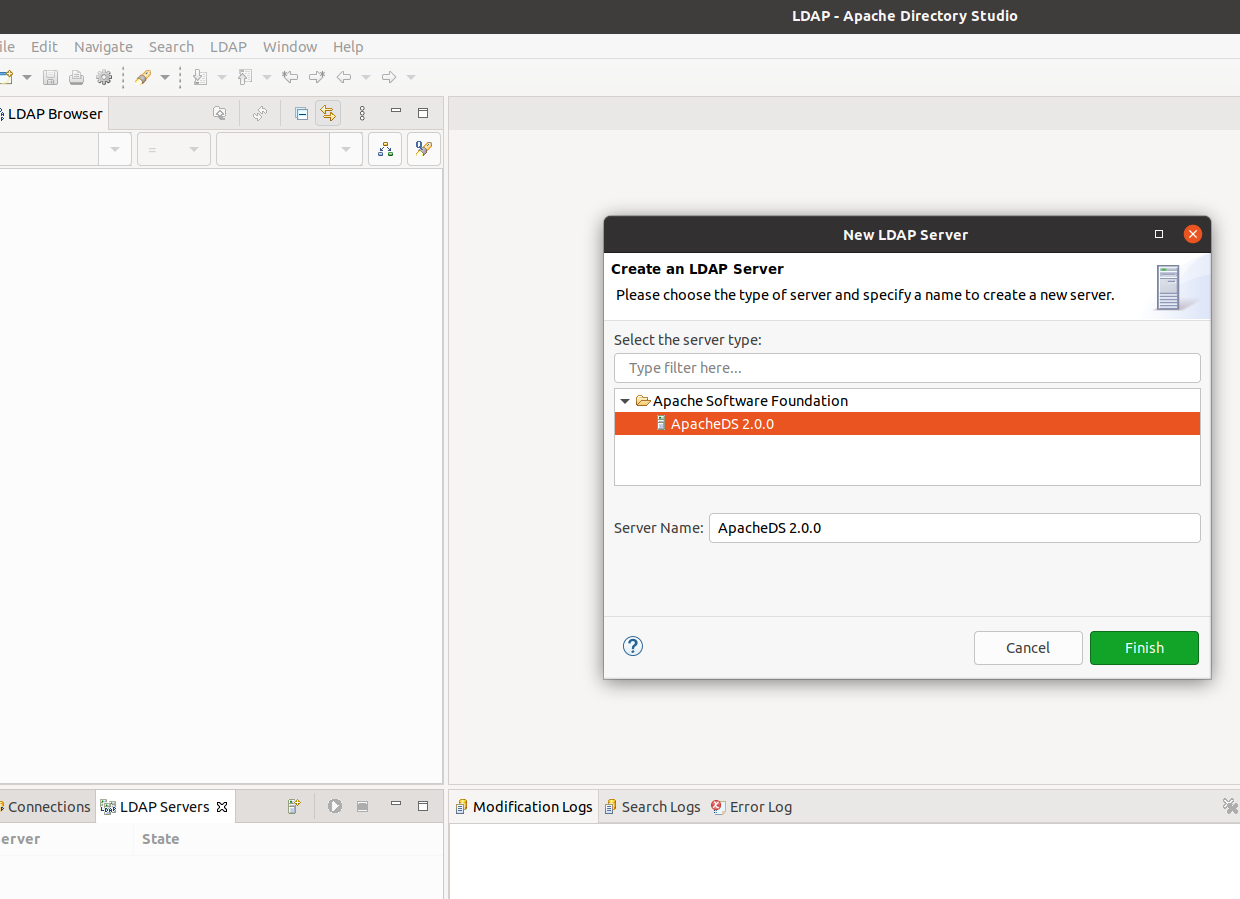

We will use Apache Directory Studio to run a local LDAP server. Download it from [directory.apache.org/studio/downloads.html](https://directory.apache.org/studio/downloads.html), extract and run.

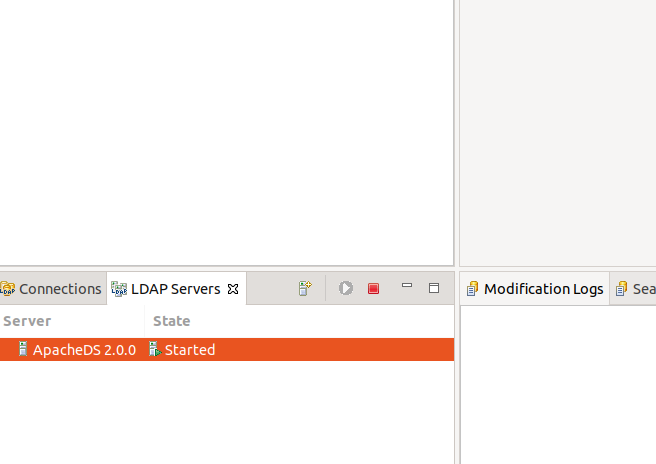

Click on the “LDAP servers” tab and create a new server.

Then start it.

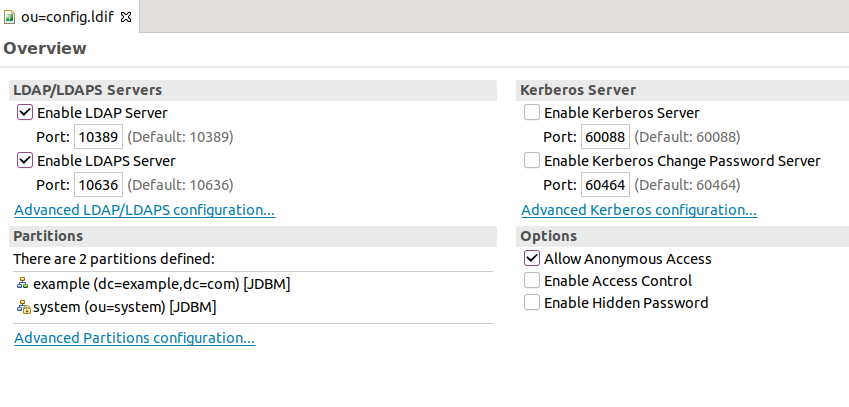

When you double-click it, you can see that it’s running on port 10389:

Right-click it and choose “Create a connection”. Let’s create a sample user: download the sample LDAP LDIF file, then in the LDAP browser tab right-click the root node, select import and choose the file.

Let’s test the connection using ldapsearch:

$ ldapsearch -H ldap://localhost:10389 -D 'cn=test@example.invalid,dc=example,dc=com' -w xyz sn=Muras

# extended LDIF

#

# LDAPv3

# base <> (default) with scope subtree

# filter: sn=Muras

# requesting: ALL

#

# Tomek Muras, example.com

dn: cn=Tomek Muras,dc=example,dc=com

mail: test@example.invalid

sn: Muras

cn: Tomek Muras

objectClass: top

objectClass: inetOrgPerson

objectClass: person

objectClass: organizationalPerson

userPassword:: e1NTSEF9WmtQNm9NTGFTK1hiZ2c5RG1WODg4T2FmaktUTXhCNkpGSEVrUWc9PQ=

=

uid: tmuras

# search result

search: 2

result: 0 Success

# numResponses: 2

# numEntries: 1

Add a login form, as per symfony.com/doc/5.4/security.html. I have put the login action in the same ProtectedController:

/**

* @Route("/login", name="login")

*/

public function login(AuthenticationUtils $authenticationUtils): Response

{

// get the login error if there is one

$error = $authenticationUtils->getLastAuthenticationError();

// last username entered by the user

$lastUsername = $authenticationUtils->getLastUsername();

return $this->render('protected/login.html.twig', [

'last_username' => $lastUsername,

'error' => $error,

]);

}

Create the LDAP service in Symfony, based on symfony.com/doc/5.4/security/ldap.html, by adding the following under the services key in config/services.yaml:

    Symfony\Component\Ldap\Ldap:

        arguments: ['@Symfony\Component\Ldap\Adapter\ExtLdap\Adapter']

        tags:

            - ldap

    Symfony\Component\Ldap\Adapter\ExtLdap\Adapter:

        arguments:

            -   host: localhost

                port: 10389

                encryption: false

                options:

                    protocol_version: 3

                    referrals: false

Update config/packages/security.yaml:

security:

    enable_authenticator_manager: true

    # https://symfony.com/doc/current/security.html#registering-the-user-hashing-passwords

    password_hashers:

        Symfony\Component\Security\Core\User\PasswordAuthenticatedUserInterface: 'auto'

    # https://symfony.com/doc/current/security.html#loading-the-user-the-user-provider

    providers:

        # used to reload user from session & other features (e.g. switch_user)

        app_user_provider:

            entity:

                class: App\Entity\User

                property: email

    firewalls:

        dev:

            pattern: ^/(_(profiler|wdt)|css|images|js)/

            security: false

        main:

            lazy: true

            provider: app_user_provider

            form_login_ldap:

                service: Symfony\Component\Ldap\Ldap

                dn_string: 'cn={username},dc=example,dc=com'

                login_path: login

                check_path: login

    # Easy way to control access for large sections of your site

    # Note: Only the *first* access control that matches will be used

    access_control:

        - { path: ^/protected, roles: ROLE_USER }
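The dn_string setting is the piece that ties the login form to LDAP: the {username} placeholder is replaced with whatever the user typed, and the result is the DN used for the bind. Here is a rough shell sketch of that substitution. The function name bind_dn is made up, and it ignores the DN escaping that Symfony applies to the username, so treat it as an illustration only.

```shell
# bind_dn TEMPLATE USERNAME: replace the first {username} placeholder in
# TEMPLATE with USERNAME and print the resulting DN.
# Simplification: no LDAP escaping of USERNAME is performed here.
bind_dn() {
    template=$1
    username=$2
    placeholder='{username}'
    case $template in
        *"$placeholder"*)
            # Text before the placeholder + username + text after it.
            printf '%s%s%s\n' \
                "${template%%"$placeholder"*}" \
                "$username" \
                "${template#*"$placeholder"}"
            ;;
        *)
            # No placeholder: the template is used unchanged.
            printf '%s\n' "$template"
            ;;
    esac
}

bind_dn 'cn={username},dc=example,dc=com' 'test@example.invalid'
```

The printed DN is the same one passed to ldapsearch with -D earlier, which is a handy way to check a dn_string before wiring it into the firewall.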

Now we can access the protected resource once a valid LDAP password is entered:

The relevant logs during the login:

[PHP ] [Sun Dec 12 16:03:34 2021] 127.0.0.1:39400 Accepted

[PHP ] [Sun Dec 12 16:03:34 2021] 127.0.0.1:39400 [302]: POST /login

[PHP ] [Sun Dec 12 16:03:34 2021] 127.0.0.1:39400 Closing

[PHP ] [Sun Dec 12 16:03:34 2021] 127.0.0.1:39404 Accepted

[Web Server ] Dec 12 16:03:34 |INFO | SERVER POST (302) /login host="127.0.0.1:8004" ip="127.0.0.1" scheme="https"

[Web Server ] Dec 12 16:03:34 |INFO | SERVER GET (200) /protected

[PHP ] [Sun Dec 12 16:03:34 2021] 127.0.0.1:39404 [200]: GET /protected

[PHP ] [Sun Dec 12 16:03:34 2021] 127.0.0.1:39404 Closing

[PHP ] [Sun Dec 12 16:03:34 2021] 127.0.0.1:39406 Accepted

[PHP ] [Sun Dec 12 16:03:34 2021] 127.0.0.1:39406 [200]: GET /_wdt/f011c6

[Web Server ] Dec 12 16:03:34 |INFO | SERVER GET (200) /_wdt/f011c6 ip="127.0.0.1"

[PHP ] [Sun Dec 12 16:03:34 2021] 127.0.0.1:39406 Closing

[Application] Dec 12 16:03:34 |INFO | REQUES Matched route "login". method="POST" request_uri="http://127.0.0.1:8000/login" route="login" route_parameters={"_controller":"App\\Controller\\ProtectedController::login","_route":"login"}

[Application] Dec 12 16:03:34 |DEBUG | SECURI Checking for authenticator support. authenticators=1 firewall_name="main"

[Application] Dec 12 16:03:34 |DEBUG | SECURI Checking support on authenticator. authenticator="Symfony\\Component\\Ldap\\Security\\LdapAuthenticator"

[Application] Dec 12 16:03:34 |DEBUG | DOCTRI SELECT t0.id AS id_1, t0.email AS email_2, t0.roles AS roles_3 FROM user t0 WHERE t0.email = ? LIMIT 1 0="test@example.invalid"

[Application] Dec 12 16:03:34 |INFO | PHP User Deprecated: Since symfony/ldap 5.3: Not implementing the "Symfony\Component\Security\Core\User\PasswordAuthenticatedUserInterface" interface in class "App\Entity\User" while using password-based authenticators is deprecated.

[Application] Dec 12 16:03:34 |INFO | PHP User Deprecated: Since symfony/security-http 5.3: Not implementing the "Symfony\Component\Security\Core\User\PasswordAuthenticatedUserInterface" interface in class "App\Entity\User" while using password-based authentication is deprecated.

[Application] Dec 12 16:03:34 |INFO | SECURI Authenticator successful! authenticator="Symfony\\Component\\Security\\Http\\Authenticator\\Debug\\TraceableAuthenticator" token={"Symfony\\Component\\Security\\Core\\Authentication\\Token\\UsernamePasswordToken":"UsernamePasswordToken(user=\"test@example.invalid\", authenticated=true, roles=\"ROLE_USER\")"}

[Application] Dec 12 16:03:34 |DEBUG | SECURI The "Symfony\Component\Security\Http\Authenticator\Debug\TraceableAuthenticator" authenticator set the response. Any later authenticator will not be called

[Application] Dec 12 16:03:34 |DEBUG | SECURI Stored the security token in the session. key="_security_main"

The full code is available on github.com/tmuras/symfony-ldap-authentication.

OWASP Top 10:2021 is out there!

I couldn’t find the PDF version of the list exported, so I’ve generated one.

I have a file sql.gz that is a big MySQL dump, so I do not want to extract it when importing.

I would also like to execute a command before the dump gets imported. In my case I want to disable binary logging for the session:

SET sql_log_bin = 0;

Here is how this may be done in shell:

{ echo 'SET sql_log_bin = 0;' ; gzip -dc sql.gz ; } | mysql

I didn’t test it with huge sql.gz files, so I’m not sure whether the performance differs from piping gzip -dc sql.gz into mysql directly.
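If you do this often, the grouping can be wrapped in a small function. This is only a sketch: the name stream_dump is mine, and all it does is print the SET statement followed by the decompressed dump, so anything that reads SQL on stdin can sit on the other end of the pipe.

```shell
# stream_dump FILE: print the session preamble, then the decompressed dump.
# FILE is a gzip-compressed SQL dump; gzip streams it to stdout, so the
# uncompressed dump is never written to disk.
stream_dump() {
    echo 'SET sql_log_bin = 0;'
    gzip -dc "$1"
}

# Typical use (assumes the mysql client can already log in, e.g. via ~/.my.cnf):
#   stream_dump sql.gz | mysql
```

Piping the function into `head` instead of mysql is a quick way to confirm that the SET statement really comes first.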

atop is an extremely useful top-like utility that shows the current state of the system.

To try it out run “atop” and review its different output modes by pressing: m (memory), d (disk), n (network), s (scheduling), v (various), c (command line), u (per user), p (per program), y (toggle threads).

On top of its interactive, top-like (but top on steroids!) functionality, atop can capture (log) system state snapshots and then review them.

To start recording, use the -w option with a file name, followed by the interval (in seconds) between samples, e.g.:

atop -w log.atop 1

Later on, read the data from the log file:

atop -r log.atop

Jump one interval forward by pressing “t”; to go backward press “T”. To jump to a specified time, press “b”.

The Ubuntu package contains atop.service, which logs samples into /var/log/atop/atop_YYYYMMDD files at an interval of 600 seconds.
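To open one of those daily logs without typing the date by hand, something like the following could work. It is a sketch that assumes the /var/log/atop/atop_YYYYMMDD naming mentioned above; the function name atop_log_path is made up for this example.

```shell
# atop_log_path [YYYYMMDD]: print the path of the daily atop log for the
# given date, defaulting to today's date.
atop_log_path() {
    day=${1:-$(date +%Y%m%d)}
    printf '/var/log/atop/atop_%s\n' "$day"
}

# Typical use:
#   atop -r "$(atop_log_path)"            # today's log
#   atop -r "$(atop_log_path 20220518)"   # a specific day
```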

The idea is that a machine hosted anywhere (e.g. behind NAT, with no public IP) will establish an SSH tunnel to a publicly available server. The only connectivity required is access to the server’s IP and port.

First install autossh and generate public/private keys.

apt install autossh

ssh-keygen

Let’s say that:

Create /etc/systemd/system/autossh.service with the content below.

[Unit]

Description=AutoSSH to My Server

After=network.target

[Service]

Environment="AUTOSSH_GATETIME=0"

ExecStart=/usr/bin/autossh -N -M 0 -o "ExitOnForwardFailure=yes" -o "ServerAliveInterval=180" -o "ServerAliveCountMax=3" -o "PubkeyAuthentication=yes" -o "PasswordAuthentication=no" -i /root/.ssh/id_rsa -R 6668:localhost:22 tunnel@server.muras.eu -p 10001

Restart=always

[Install]

WantedBy=multi-user.target

Then enable the service and reboot to make sure it works automatically.

systemctl enable autossh

reboot

On the server, create a user tunnel that has no interactive shell session (-s /bin/false) but does get a home directory (-m).

useradd -d /home/tunnel -s /bin/false -m tunnel

Add the public key of the root user from our client (/root/.ssh/id_rsa.pub) to /home/tunnel/.ssh/authorized_keys:

no-pty,no-X11-forwarding,permitopen="localhost:6668",command="/bin/echo do-not-send-commands" ssh-rsa abcdef....

To access the client machine, log in to the server and run:

ssh -p 6668 tunnel@127.0.0.1
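The authorized_keys entry above is easy to mistype, so here is a small sketch that builds it from a public key. The function name restricted_key_line is hypothetical, and the options are copied from the entry shown earlier; adjust them to your own setup.

```shell
# restricted_key_line PUBKEY: print an authorized_keys line that prefixes
# PUBKEY with the restrictions used for the tunnel user.
restricted_key_line() {
    printf '%s %s\n' \
        'no-pty,no-X11-forwarding,permitopen="localhost:6668",command="/bin/echo do-not-send-commands"' \
        "$1"
}

# Typical use on the server, after copying the client's id_rsa.pub over:
#   restricted_key_line "$(cat id_rsa.pub)" >> /home/tunnel/.ssh/authorized_keys
```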