Thanks to Mozilla’s llamafile, it is now super easy to run an LLM locally.

Install the `ape` loader and register it with binfmt_misc, as described in https://github.com/Mozilla-Ocho/llamafile#gotchas.

sudo wget -O /usr/bin/ape https://cosmo.zip/pub/cosmos/bin/ape-$(uname -m).elf

sudo chmod +x /usr/bin/ape

sudo sh -c "echo ':APE:M::MZqFpD::/usr/bin/ape:' >/proc/sys/fs/binfmt_misc/register"

sudo sh -c "echo ':APE-jart:M::jartsr::/usr/bin/ape:' >/proc/sys/fs/binfmt_misc/register"

Download the model from Hugging Face, make it executable, and run it:

curl -LO https://huggingface.co/jartine/llava-v1.5-7B-GGUF/resolve/main/llava-v1.5-7b-q4-server.llamafile

chmod +x llava-v1.5-7b-q4-server.llamafile

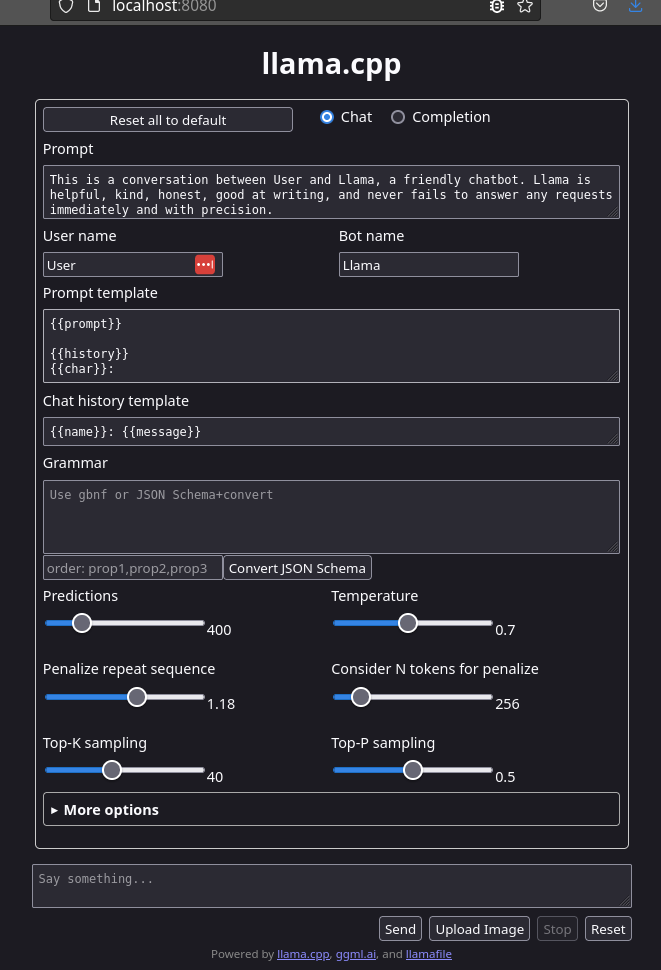

./llava-v1.5-7b-q4-server.llamafile

Open http://127.0.0.1:8080.
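Besides the browser UI, the running server can probably also be queried from the command line. The sketch below assumes this llamafile build exposes the llama.cpp server's `/completion` endpoint on the same address. The helper names `completion_payload` and `ask_llamafile` are made up for illustration, and the payload builder does no JSON escaping, so it only works for prompts without double quotes or backslashes.

```shell
# Hypothetical helper: build a JSON request body for a prompt.
# Assumption: the prompt contains no double quotes or backslashes,
# because no JSON escaping is performed here.
completion_payload() {
  printf '{"prompt":"%s","n_predict":64}' "$1"
}

# Hypothetical helper: POST the prompt to the local server.
# Assumption: the server listens on 127.0.0.1:8080 and serves /completion.
ask_llamafile() {
  curl -s http://127.0.0.1:8080/completion \
    -H "Content-Type: application/json" \
    -d "$(completion_payload "$1")"
}
```

With the server still running in another terminal, `ask_llamafile "Name three uses for a local LLM."` should print a JSON response containing the generated text.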