The problem

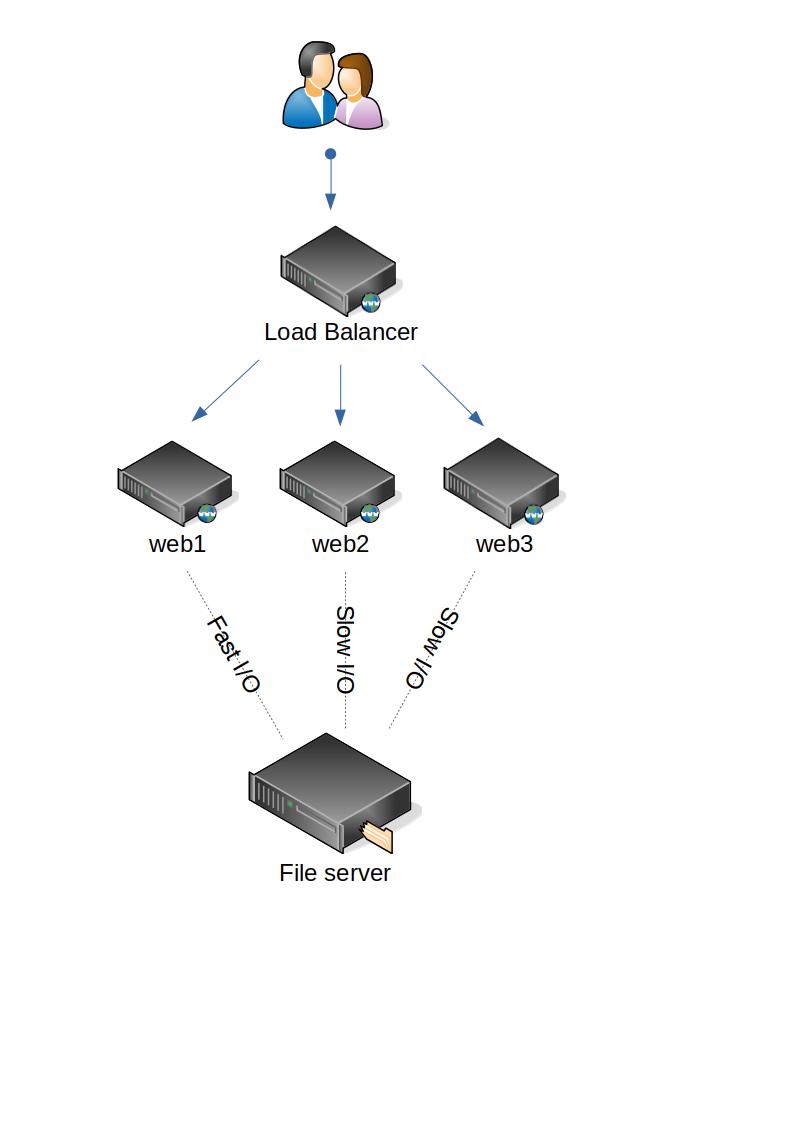

We have a typical web application that is deployed behind a load balancer with several web servers behind. The web servers are nearly identical - running the same OS, code, packages. But they do differ in one way - some have a quick access to the external asset’s repository. Other web servers do have the access as well but it is much slower. It looks something like this:

Moodle context

The web application is Moodle. It is configured to use a file system repository to give access to the assets server. One of the web servers (web1) sits in a co-located network with fast access to the storage. Access from web2 and web3 is still possible (and configured), but much slower. It would be beneficial if all requests that trigger access to the external file server were routed through web1. In practice, this means we want to send all requests to the /repository/* scripts to web1.

The solution

HAProxy can do exactly what we need:

- If a request goes to a /repository/* URL, route it to web1.
- Don't compromise high availability: if our preferred web1 is down, send those requests to web2 or web3 instead.
- Send all other requests to web1 (there is no point in dedicating web1 to repository requests only), web2 or web3.
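To make the routing rules above concrete, here is a small toy model of them in Python. It is not HAProxy code: it ignores maxconn, queueing and health-check timing, and the `Host`/`Backend` classes, the `route()` function and the example paths are hypothetical names used only for illustration. Active servers are picked round-robin (HAProxy's default `balance` algorithm), and backup servers are used only when no active server is up. Like HAProxy without `option allbackups`, the model sends all failover traffic to the first healthy backup, so with web1 down the repository requests go to web2, and to web3 only if web2 is down as well.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Host:
    """A physical web server; `up` stands in for the result of HAProxy's health check."""
    name: str
    up: bool = True


@dataclass
class Backend:
    """Hypothetical model of an HAProxy backend: (host, is_backup) pairs."""
    name: str
    servers: list[tuple[Host, bool]]
    _next: int = field(default=0, repr=False)  # round-robin position

    def pick(self) -> Optional[str]:
        # Round-robin over healthy, non-backup servers.
        active = [h for h, backup in self.servers if h.up and not backup]
        if active:
            host = active[self._next % len(active)]
            self._next += 1
            return host.name
        # No active server left: like HAProxy without "option allbackups",
        # the first healthy backup receives all traffic.
        for h, backup in self.servers:
            if backup and h.up:
                return h.name
        return None  # every server is down


def route(path: str, file_servers: Backend, all_servers: Backend) -> Optional[str]:
    # Mirrors "acl url_file path_beg /repository/" + "use_backend file_servers if url_file".
    backend = file_servers if path.startswith("/repository/") else all_servers
    return backend.pick()


if __name__ == "__main__":
    web1, web2, web3 = Host("web1"), Host("web2"), Host("web3")
    all_servers = Backend("all_servers", [(web1, False), (web2, False), (web3, False)])
    file_servers = Backend("file_servers", [(web1, False), (web2, True), (web3, True)])

    print(route("/repository/example.php", file_servers, all_servers))  # -> web1
    print([route("/course/view.php", file_servers, all_servers) for _ in range(3)])
    # -> ['web1', 'web2', 'web3']
    web1.up = False  # simulate web1 failing its health check
    print(route("/repository/example.php", file_servers, all_servers))  # -> web2
```

The same `Host` objects are shared by both backends, so a failed health check on web1 affects both, which matches the fact that both backends point at the same three machines.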

We start the HAProxy configuration with the frontend section. The custom ACL rule below, called “url_file”, matches requests whose path begins with /repository/. If the ACL condition is met, we send the request to the backend called “file_servers”; otherwise the request goes to the default backend, “all_servers”.

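```
frontend http-in
    bind *:80
    # path_beg inspects the HTTP request, so this assumes mode http
    # (e.g. set in a defaults section not shown here)
    acl url_file path_beg /repository/
    default_backend all_servers
    # Requests matching url_file go to file_servers instead of the default backend
    use_backend file_servers if url_file
```

Next is the definition of our backend all_servers. Nothing unusual here, except that web1 gets a lower connection limit (maxconn 16 instead of 32), since it will be busier serving the additional repository requests.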

```
backend all_servers
    # web1 gets a lower limit because it also serves the repository requests
    server web1 10.0.3.225:80 maxconn 16 check
    server web2 10.0.3.148:80 maxconn 32 check
    server web3 10.0.3.50:80 maxconn 32 check
```

In the “file_servers” backend we want to use only web1, unless it is down. Only then should the other web servers take over. This is done with HAProxy's “backup” server option:

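```
backend file_servers
    # web1 is the only active server in this backend
    server web1 10.0.3.225:80 maxconn 32 check
    # Backups receive traffic only when web1 fails its health check; by default
    # only the first available backup is used (see option allbackups)
    server web2 10.0.3.148:80 maxconn 32 check backup
    server web3 10.0.3.50:80 maxconn 32 check backup
```

The configuration works as expected. The requests to http://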