Introduction

I’ve been working for some time on rewriting the Global Search feature for Moodle. This is search functionality that spans different areas of Moodle. Ideally it should allow searching everywhere within Moodle: forums, physical documents attached as resources, etc. The implementation has to work in PHP, so as a search engine I’ve decided to use Zend’s implementation of Lucene. Unfortunately, the library doesn’t seem to be actively maintained: there are very few changes in the SVN log, and there has been practically no development of Zend_Search_Lucene since November 2010 (the few entries in 2011 just fix typos or update the copyright date). The bug tracker is also full of Lucene issues with very little activity.

Having said that, I didn’t find any other search engine library implemented natively in PHP, so Zend_Search_Lucene it is! (please, please let me know if you know any alternatives)

Zend Lucene indexing performance-related settings

There are 2 settings I changed to affect the performance of indexing:

- $maxBufferedDocs

- $mergeFactor

maxBufferedDocs

From the documentation:

Number of documents required before the buffered in-memory documents are written into a new Segment. Default value is 10.

This simply means that after every $maxBufferedDocs calls to addDocument(), the buffered documents are committed to the index. Committing requires obtaining a write lock on the Lucene index.

So it should be straightforward: the larger the value, the less often the index is flushed. Overall performance (e.g. number of documents indexed per second) should therefore be higher, but the memory footprint is bigger.
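As a rough illustration of that trade-off, here is a toy model in Python (not PHP, and not taken from the Zend code). It assumes one segment is written after every `max_buffered_docs` added documents, plus one final flush for any leftover documents. The function name is made up for this sketch.

```python
import math


def flush_count(n_docs, max_buffered_docs):
    """Toy model: number of segment flushes needed to index n_docs.

    Assumes a flush after every max_buffered_docs documents and one
    final flush for a partially filled buffer.
    """
    return math.ceil(n_docs / max_buffered_docs)


# 9990 is the number of labels used in the test described later on.
for max_buffered_docs in (5, 10, 50, 100, 200, 300, 400):
    flushes = flush_count(9990, max_buffered_docs)
    print(f"maxBufferedDocs={max_buffered_docs:>3}: {flushes} flushes")
```

In this model a bigger buffer means fewer flushes (and fewer write locks), at the cost of holding more documents in memory at once.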

mergeFactor

The documentation says:

mergeFactor determines how often segment indices are merged by addDocument(). With smaller values, less RAM is used while indexing, and searches on unoptimized indices are faster, but indexing speed is slower. With larger values, more RAM is used during indexing, and while searches on unoptimized indices are slower, indexing is faster. Thus larger values (> 10) are best for batch index creation, and smaller values (< 10) for indices that are interactively maintained.

So it seems pretty simple: for initial indexing we should set mergeFactor as high as possible and then lower it when more content is added to the index later on. With maxBufferedDocs we should simply find a balance between speed and memory consumption.

Testing indexing speed

I’ve tested various settings with my initial code for Global Search. As test data I’ve created a Moodle site with 1000 courses (really 999 courses, as I didn’t use course id=1, the front page course in Moodle). Each course has 10 sections with 1 label inside each section, that is, 10 labels per course (note: the number of courses and sections is not really relevant for testing indexing speed).

Each label is about 10k of simple, randomly generated HTML text, based on the words from “The Hitchhiker’s Guide to the Galaxy”. Here is a fragment of a sample label text (DB column intro):

<h2>whine the world, so far an inch wide, and</h2> <h2>humanoid, but really knows all she said. - That</h2> <span>stellar neighbour, Alpha Centauri for half an interstellar distances between different planet. Armed intruders in then turned it as it was take us in a run through finger the about is important. - shouted the style and decided of programmers with distaste at the ship a new breakthrough in mid-air and we drop your white mice, - of it's wise Zaphod Beeblebrox. Something pretty improbable no longer be a preparation for you. - Come off for century or so, - The two suns! It is. (Sass:</span> [...9693 characters more...]

The intro and the name of each label are indexed. The total amount of data to index is about 100MB, exactly 104,899,975 characters (SELECT SUM( CHAR_LENGTH( `name` ) ) + SUM( CHAR_LENGTH( `intro` ) ) FROM `mdl_label`) in 9990 labels. (Note for picky ones: no, there are no multi-byte characters there).

I’ve tested it on my local machine running: 64 bit Ubuntu 11.10, apache2-mpm-prefork (2.2.20-1ubuntu1.2), mysql-server-5.1 (5.1.61-0ubuntu0.11.10.1), php5 (5.3.6-13ubuntu3.6) with php5-xcache (1.3.2-1). Hardware: Intel Core i7-2600K @ 3.40GHz, 16GB RAM.

The results:

| Time (s) | maxBufferedDocs | mergeFactor |
|---|---|---|
| 1430.1 | 100 | 10 |
| 1464.7 | 300 | 400 |
| 1471.1 | 200 | 10 |
| 1540.9 | 200 | 100 |
| 1543.3 | 300 | 100 |
| 1549.7 | 200 | 200 |
| 1557.5 | 100 | 5 |
| 1559.3 | 300 | 200 |
| 1560.4 | 300 | 300 |
| 1577.0 | 200 | 300 |
| 1578.9 | 50 | 10 |
| 1581.5 | 200 | 5 |
| 1584.6 | 300 | 50 |
| 1586.6 | 300 | 10 |
| 1589.3 | 200 | 50 |
| 1591.2 | 200 | 400 |
| 1616.7 | 100 | 50 |
| 1742.2 | 50 | 5 |
| 1746.4 | 400 | 5 |
| 1770.7 | 400 | 10 |
| 1776.1 | 300 | 5 |
| 1802.3 | 400 | 50 |
| 1803.9 | 400 | 200 |
| 1815.7 | 50 | 50 |
| 1830.7 | 400 | 100 |
| 1839.4 | 400 | 400 |
| 1854.9 | 100 | 300 |
| 1870.1 | 400 | 300 |
| 1894.1 | 100 | 100 |
| 1897.2 | 100 | 200 |
| 1909.7 | 100 | 400 |
| 1924.4 | 10 | 10 |
| 1955.1 | 10 | 50 |
| 2133.4 | 5 | 10 |
| 2189.0 | 10 | 5 |
| 2257.6 | 10 | 100 |
| 2269.8 | 50 | 100 |
| 2282.7 | 5 | 50 |
| 2393.5 | 5 | 5 |
| 2466.8 | 5 | 100 |
| 2979.4 | 10 | 200 |
| 3146.8 | 5 | 200 |
| 3395.9 | 50 | 400 |
| 3427.9 | 50 | 200 |
| 3471.9 | 50 | 300 |
| 3747.0 | 10 | 300 |
| 3998.1 | 5 | 300 |
| 4449.8 | 10 | 400 |
| 5070.0 | 5 | 400 |
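The flat list above is sorted by time, which makes it hard to see how each setting behaves on its own. Here is a small Python sketch that pivots the same measurements into a maxBufferedDocs × mergeFactor grid. It reports the fastest combination and, for each maxBufferedDocs, how much the time varies across mergeFactor values. The numbers are copied from the table; the helper names are only for illustration.

```python
# Columns: mergeFactor values, in this order.
MERGE_FACTORS = (5, 10, 50, 100, 200, 300, 400)

# Rows: maxBufferedDocs -> indexing time in seconds for each mergeFactor.
TIMES = {
    5:   (2393.5, 2133.4, 2282.7, 2466.8, 3146.8, 3998.1, 5070.0),
    10:  (2189.0, 1924.4, 1955.1, 2257.6, 2979.4, 3747.0, 4449.8),
    50:  (1742.2, 1578.9, 1815.7, 2269.8, 3427.9, 3471.9, 3395.9),
    100: (1557.5, 1430.1, 1616.7, 1894.1, 1897.2, 1854.9, 1909.7),
    200: (1581.5, 1471.1, 1589.3, 1540.9, 1549.7, 1577.0, 1591.2),
    300: (1776.1, 1586.6, 1584.6, 1543.3, 1559.3, 1560.4, 1464.7),
    400: (1746.4, 1770.7, 1802.3, 1830.7, 1803.9, 1870.1, 1839.4),
}


def best_setting(times):
    """Return (time, maxBufferedDocs, mergeFactor) of the fastest run."""
    return min(
        (seconds, max_buffered, merge_factor)
        for max_buffered, row in times.items()
        for merge_factor, seconds in zip(MERGE_FACTORS, row)
    )


def spread(row):
    """Difference between the slowest and fastest time in one row."""
    return max(row) - min(row)


seconds, max_buffered, merge_factor = best_setting(TIMES)
print(f"fastest: {seconds} s (maxBufferedDocs={max_buffered}, "
      f"mergeFactor={merge_factor})")
for max_buffered, row in TIMES.items():
    print(f"maxBufferedDocs={max_buffered:>3}: min={min(row):.1f} s, "
          f"max={max(row):.1f} s, spread={spread(row):.1f} s")
```

The spread column shows at a glance how sensitive each maxBufferedDocs value is to the choice of mergeFactor.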

The results are not what I would expect, and definitely not what the documentation suggests, which is that increasing both values should decrease the total indexing time. In fact, I was so surprised that the first thing I suspected was that my tests were invalid because something on the server was affecting the performance. So I’ve repeated a few tests:

| First test (s) | Second test (s) | maxBufferedDocs | mergeFactor |
|---|---|---|---|
| 1430.1 | 1444.9 | 100 | 10 |
| 1464.7 | 1490.6 | 300 | 400 |
| 1471.1 | 1491.1 | 200 | 10 |
| 1540.9 | 1593.5 | 200 | 100 |
| 1894.1 | 1867.7 | 100 | 100 |
| 1924.4 | 1931.2 | 10 | 10 |
| 1909.7 | 1920.4 | 100 | 400 |
| 5070.0 | 5133.3 | 5 | 400 |
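To put a number on how repeatable the runs are, here is a short Python sketch that computes the relative difference between the first and second test for each pair of settings. The values are copied from the table above; the variable names are only for illustration.

```python
# (maxBufferedDocs, mergeFactor, first run in s, second run in s)
RUNS = [
    (100, 10, 1430.1, 1444.9),
    (300, 400, 1464.7, 1490.6),
    (200, 10, 1471.1, 1491.1),
    (200, 100, 1540.9, 1593.5),
    (100, 100, 1894.1, 1867.7),
    (10, 10, 1924.4, 1931.2),
    (100, 400, 1909.7, 1920.4),
    (5, 400, 5070.0, 5133.3),
]


def relative_diff(first, second):
    """Signed difference of the second run relative to the first."""
    return (second - first) / first


for max_buffered, merge_factor, first, second in RUNS:
    diff = relative_diff(first, second)
    print(f"maxBufferedDocs={max_buffered:>3} mergeFactor={merge_factor:>3}: "
          f"{diff:+.2%}")

worst = max(abs(relative_diff(first, second)) for _, _, first, second in RUNS)
print(f"largest deviation between runs: {worst:.2%}")
```

The largest deviation between the two runs stays below 4%, which is small compared with the differences between settings.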

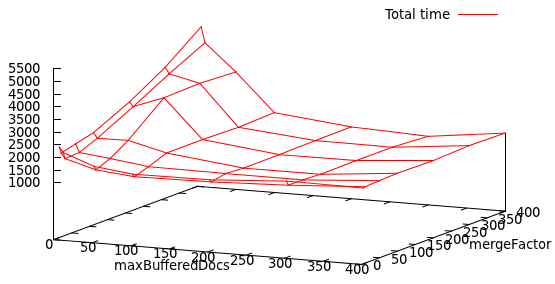

The tests look OK! Here is a 3d graph of the results (lower values are better):

Explaining the results would require more analysis of the library implementation, but for end users like myself it makes the decision very simple: maxBufferedDocs should be set to 100 and mergeFactor to 10 (the default value). As you can see on the graph, once maxBufferedDocs reaches 100, neither setting makes much of a difference (the surface is flat). Setting both higher will only increase the memory usage.

With those settings, on commodity hardware, the indexing speed was 71kB of text per second (7 big labels per second). The indexing process is clearly CPU-bound; further optimization would require optimizing the Zend_Search_Lucene code.
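For reference, those per-second figures follow directly from the test data. This is a quick Python check using the total size, the label count and the best time from the first test; "kB" is taken here to mean 1024 bytes, which is an assumption on my side.

```python
TOTAL_BYTES = 104_899_975   # size of all label names and intros
LABEL_COUNT = 9_990         # number of labels indexed
BEST_TIME_S = 1430.1        # maxBufferedDocs=100, mergeFactor=10

bytes_per_second = TOTAL_BYTES / BEST_TIME_S
kib_per_second = bytes_per_second / 1024
labels_per_second = LABEL_COUNT / BEST_TIME_S

print(f"{bytes_per_second:,.0f} bytes/s")
print(f"{kib_per_second:.1f} kB/s")
print(f"{labels_per_second:.2f} labels/s")
```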

Testing performance degradation

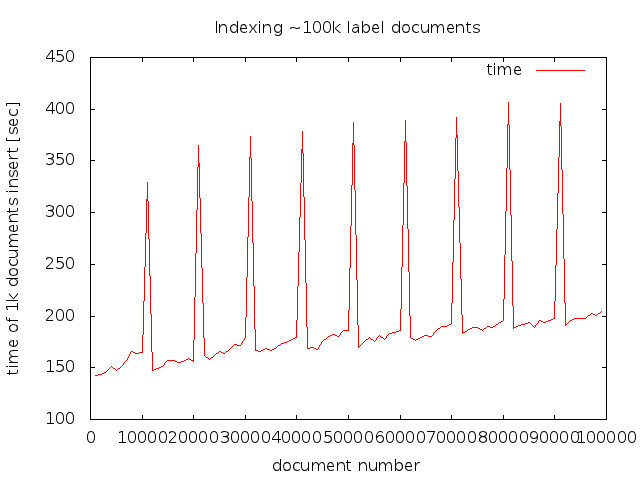

The next thing to check is does the indexing speed degrade over the time. The speed of 71 kB/sec may be OK but if it degrades much over the time, then it may slow down to unacceptable values. To test it I’ve created ~100k labels of the total size 1,049,020,746 (1GB) and run the indexer again. The graph below shows the times it took to add each 1000 documents.

The time to add a single document is initially 0.05 sec and it keeps growing, up to 0.15 sec at the end (100k documents). There is a spike every 100 documents, which matches the value of maxBufferedDocs. There are also bigger spikes in processing time every 1,000 documents, and even bigger ones every 10,000. I think this is caused by Zend_Search_Lucene merging segments into a single larger segment (with mergeFactor set to 10, ten 100-document segments would be merged at 1,000 documents and ten 1,000-document segments at 10,000), but I didn’t study the code deeply enough to be 100% sure.
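The spike pattern is at least consistent with a Lucene-style logarithmic merge. The Python sketch below is a simplified model written for illustration only and is not derived from the Zend_Search_Lucene source. It assumes that a segment is flushed every `max_buffered_docs` documents, and that whenever the newest `merge_factor` segments have the same size they are merged into one.

```python
def simulate_merges(n_docs, max_buffered_docs=100, merge_factor=10):
    """Return a list of (docs_added_so_far, merged_segment_size) events."""
    segments = []  # sizes of on-disk segments, oldest first
    events = []
    for added in range(max_buffered_docs, n_docs + 1, max_buffered_docs):
        # The in-memory buffer is full: flush it as a new segment.
        segments.append(max_buffered_docs)
        # Cascade: merge while the newest merge_factor segments are equal.
        while (len(segments) >= merge_factor
               and len(set(segments[-merge_factor:])) == 1):
            merged = sum(segments[-merge_factor:])
            del segments[-merge_factor:]
            segments.append(merged)
            events.append((added, merged))
    return events


events = simulate_merges(100_000)
for size in (1_000, 10_000, 100_000):
    count = sum(1 for _, merged in events if merged == size)
    print(f"merges producing a {size}-document segment: {count}")
```

In this model a merge that rewrites 1,000 documents happens every 1,000 additions, and one that rewrites 10,000 documents happens every 10,000, which would explain progressively bigger spikes at those intervals.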

It took 5.5h in total to index 1GB of data. The average throughput dropped from 73,356 bytes/sec (when indexing 100MB) to 53,903 bytes/sec (when indexing 1GB of text).

The bottom line is that the speed of indexing keeps decreasing as the index grows, but not dramatically: throughput fell by roughly a quarter while the amount of data grew tenfold.
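To put the slowdown in proportion, here is a short Python calculation using only the figures quoted above:

```python
SMALL_BYTES = 104_899_975      # first test, about 100MB
LARGE_BYTES = 1_049_020_746    # second test, about 1GB
SMALL_THROUGHPUT = 73_356      # bytes/sec when indexing 100MB
LARGE_THROUGHPUT = 53_903      # bytes/sec when indexing 1GB

growth = LARGE_BYTES / SMALL_BYTES
throughput_drop = 1 - LARGE_THROUGHPUT / SMALL_THROUGHPUT

print(f"data grew {growth:.1f}x")
print(f"throughput dropped by {throughput_drop:.1%}")
```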

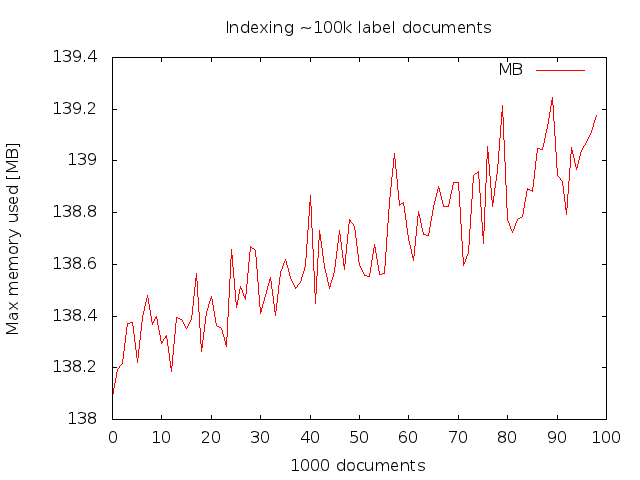

The last thing to check is the memory consumption. I checked the memory consumption after every document indexed then for each group of 1000 document I graphed the maximum memory used (the current memory used will keep jumping).

The peak memory usage does increase, but very slowly (about 1MB after indexing 100k documents).
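The grouping used for the memory graph can be sketched as below. It is written in Python for illustration: the function name and the sample values are made up, and the actual measurements were taken from the PHP indexer.

```python
def peak_per_group(samples, group_size=1000):
    """Return the maximum of each consecutive group of samples.

    samples holds one memory reading per indexed document; the result
    has one value per group of group_size documents.
    """
    return [
        max(samples[start:start + group_size])
        for start in range(0, len(samples), group_size)
    ]


# Hypothetical readings that jump up and down, grouped in threes.
readings = [10, 14, 11, 12, 15, 11, 13]
print(peak_per_group(readings, group_size=3))
```

Taking the maximum per group hides the constant jumping of the current value and leaves only the slow upward trend.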